The desktop is dead; that’s something you’d think was pretty much settled. Not for everyone of course, such as those in corporate offices, and certainly not for gamers – they need the space for those monstrous GPUs – but for the average consumer? Definitely.

Just look at the climate we’re in at the moment. The family PC is being replaced by personal smartphones and tablets, and even those who do still use one often have a laptop propped up on the desk instead of a tower/monitor combination. It saves space and it’s mobile; for those not doing video editing or high-def gaming, that’s a perfect combination.

But Intel isn’t ready to see the age of the desktop computer end; it wants to reinvent it. The cynical among you are no doubt pointing out that Intel has lost some ground to AMD in the mobile sector, despite maintaining its performance crown in the desktop CPU market. Sure, that’s probably why it’s so keen to bring back the desktop, but it does have some smart ideas. On top of that, the desktop market, like the banks at the last economic collapse, is just too big to let fail. With such a monstrous install base, Intel is hoping to re-purpose that audience, rather than try to capture a new one.

That, and Intel really wants to be our girlfriend

A big power play Intel is looking to make is changing the face of the desktop. That means altering its form factor, its shape, size and look, to make it feel more like a contemporary product. Just as the sports cars of yesteryear look ancient when placed alongside the sleek, hybrid supercars of today, the old-guard desktops (those that aren’t gamer-orientated, or modded by our talented reader base) just don’t fit in with a world of smart connected devices.

So Intel is looking to push all-in-one systems: monitor, internal hardware, speakers, peripheral interface (touch), everything in a single unit. This style is also going to be orientated towards multiple users, with multi-touch interfaces and large displays to encourage interaction.

On the more traditional side, Intel is also looking to push mini-PCs: very small form factor but powerful boxes, similar in some ways to a Steambox. The idea is to get PCs, and specifically desktop PCs, into places they haven’t been before, partly by utilising smaller chassis and partly through innovative interfaces, like voice recognition, 3D gesture-sensing cameras and bio-authentication.

To make sure these systems are ready to go at a moment’s notice, Intel is also pushing its new Ready Mode technology, a super-low power mode in its 4th gen Core i-series CPUs. Through software and board-level optimisations, Intel’s partners should be able to create systems that can enter incredibly low-power states without shutting down or logging off, meaning they’re always ready for you, without using boatloads of power.

Ready Mode will also sync with 3rd party applications to automate certain tasks. For example, when your phone comes into WiFi range, its pictures could be automatically downloaded and stored on the desktop hub.

All of this, though, is going to be powered by the new generation of processors.

Intel’s desktop CPU line will remain largely unchanged in hierarchy; Pentium and Celeron serve the entry level, Core i3 and i5 models offer an extra bit of grunt for the mainstream, the performance segment relies upon i5 and i7 chips, and Core i7 (namely the HEDT variant) will sit atop the rankings.

Keen to point out that Extreme Edition will live on, Intel showed a slide outlining the 20x performance gain of the current flagship part (Core i7 4960X) over its 2003 ancestor – the Pentium 4 EE.

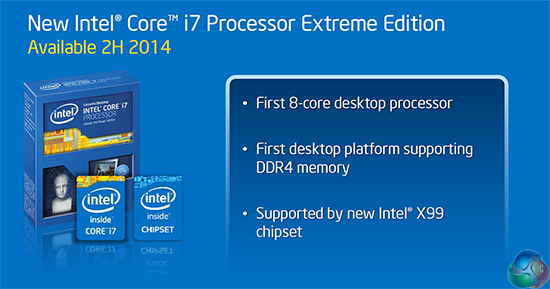

Octa-core and DDR4 for the consumer market.

Keeping on the topic of the HEDT and Extreme Edition parts, 2014 will see commercial availability for Intel’s first eight-core desktop processors. Set to enter the scene in the second half of 2014 (many web sources suggest Computex in early June as a likely launch time frame) Haswell-E, as many enthusiasts currently know it, and the new X99 chipset will set the foundation for the first DDR4-supporting desktop platform.

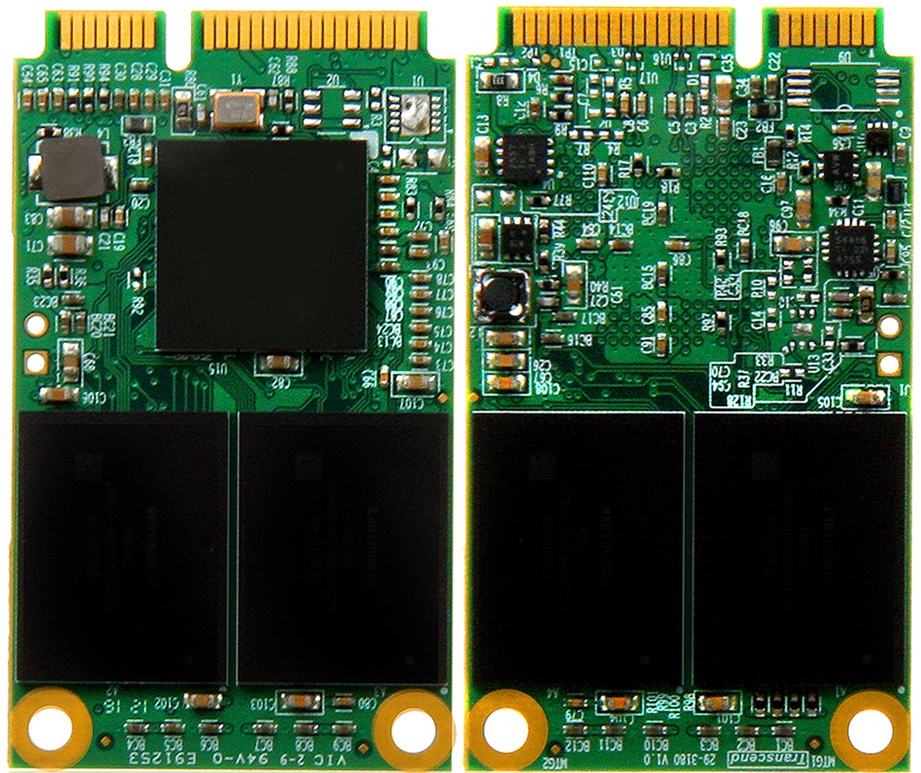

Information surrounding the processors and the X99 chipset is still scarce, although a $1000 price tag for the flagship CPU seems a safe bet. Sources suggest that the flagship chip will feature 20MB of L3 cache, 40 PCIe 3.0 lanes and a quad channel DDR4 memory controller, and will be manufactured using a 22nm process. The X99 chipset is likely to offer support for a larger number of SATA 6Gbps and USB 3.0 connections than X79. Other additions could include the M.2 storage interface.

Eight cores, a new HEDT chipset, and support for DDR4 memory. Although scepticism of Intel’s bold ‘reinvention’ claims was understandable, these specifications lend credibility to the chipmaker’s suggestions.

If Intel’s plans to reinvent the desktop are to come to fruition, the company will need to deliver in each segment of the market, and it appears to understand that.

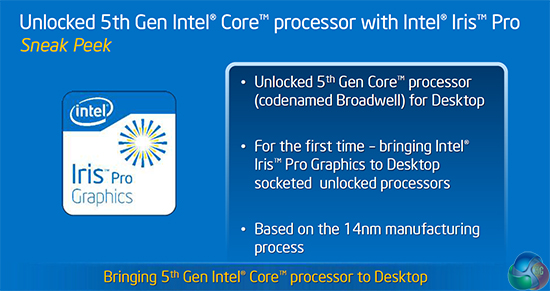

Representing a ‘tick’ in Intel’s ‘tick-tock’ cycle, Broadwell will serve the mainstream market later this year. In typical fashion, Broadwell will feature a die shrink to a 14nm manufacturing process – down from the 22nm used by Haswell.
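For a rough sense of what that shrink could mean, here is a back-of-the-envelope sketch. It assumes, purely for illustration, that the node names are literal linear feature sizes and that every dimension scales with them; real processes do not scale this cleanly, and the function name `ideal_area_ratio` is our own.

```python
# Idealised die-shrink arithmetic. Assumption: "22nm" and "14nm" are
# treated as literal linear feature sizes, which real processes only
# approximate. This is an upper bound, not a prediction.

def ideal_area_ratio(old_nm: float, new_nm: float) -> float:
    """Return new area / old area if all linear dimensions scale with the node name."""
    return (new_nm / old_nm) ** 2

ratio = ideal_area_ratio(22.0, 14.0)
print(f"Idealised area ratio: {ratio:.3f}")
print(f"Idealised density gain: {1 / ratio:.2f}x")
```

Under those assumptions the same circuit would occupy around 40 per cent of its original area, which is the headroom that a ‘tick’ typically spends on extra features such as larger graphics hardware.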

Iris Pro Graphics and 14nm manufacturing – ‘tick’.

One of Broadwell’s key features is the inclusion of Intel’s Iris Pro graphics in unlocked desktop processors. Iris Pro has been stamping its authority on the notebook scene, proving its strengths in machines such as the MacBook Pro. The graphics hardware has also found its way into Intel’s desktop CPUs, such as the 4770R used in Gigabyte’s BRIX Pro. Irrespective of the arguments for and against on-chip graphics, Iris Pro looks to provide a sizeable graphics performance upgrade over the current unlocked Haswell chips, which could be particularly useful in an SFF environment.

Launch dates for the 5th generation Intel Core processors are still unclear. If previous Intel refreshes are anything to go by, the upcoming processors are likely to be launched alongside a new-and-improved chipset. Some companies have already started showing off their products based on Intel’s next generation mainstream chipset.

A focus on the desktop market wouldn’t be a fair claim if enthusiasts and overclockers were not included. Reaching out to the overclocking community, many of whom have been critical of its desktop CPU decisions in recent years, Intel has set an update to the 4th generation Core processors for mid-2014.

Codenamed Devil’s Canyon, the updated processors will feature improved thermal interface material and updated packaging materials over their Haswell predecessors. Supported by the upcoming 9 series chipsets and geared towards overclocking, the Devil’s Canyon chips look set to address one of Haswell’s (and Ivy Bridge’s) biggest flaws – the terrible thermal contact between the silicon and heatspreader.

While Devil’s Canyon isn’t particularly big news with Broadwell and Haswell-E on the horizon, it does serve as an indication that Intel could indeed be serious about its focus on the desktop market – a category that overclockers and enthusiasts fall into.

The final processor-related announcement from Intel concerns a Pentium part. Celebrating twenty years of the Pentium brand, Intel will be releasing a Quick Sync-supporting, multiplier-unlocked Pentium chip in mid-2014. The Pentium Anniversary Edition processor will drop into 8 and 9 series motherboards.

So what does Intel’s latest press release tell us? It serves as proof that the desktop market is still big business, even if it does exist in an ever-evolving form. It also outlines Intel’s interest in the desktop market and how the company plans to reinvent it.

KitGuru says: The proof is in the pudding, so to speak. But if Intel does indeed deliver on many of its outlined promises, the future for the desktop may not be as gloomy as one would be forgiven for thinking.